Often the only way to get WHATWG URL support!

---

- Long URLs: `http://nodejs.org:89/docs/latest/api/foo/bar/qua/13949281/0f28b//5d49/b3020/url.html#test?payload1=true&payload2=false&test=1&benchmark=3&foo=38.38.011.293&bar=1234834910480&test=19299&3992&key=f5c65e1e98fe07e648249ad41e1cfdb0`

Most browsers, JavaScript runtimes $\to$ WHATWG URL; curl, runtime libraries $\to$ RFC 3986

PHP (`parse_url`): naive processing (no validation, no normalization)
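Split-only parsing is not specific to PHP. As a rough illustration, here is a minimal sketch using Python's standard `urllib.parse.urlsplit` (Python and the input URL are assumptions chosen for the example, not part of the benchmarks in these slides). The URL is cut into components, but the host case, the default port and the `..` segment are left as they were:

```python
from urllib.parse import urlsplit

# Hypothetical input: upper-case host, default port, and a dot segment,
# all things a normalizing parser would rewrite.
raw = "HTTP://EXAMPLE.com:80/a/../b"

parts = urlsplit(raw)

print(parts.scheme)    # the scheme is lowercased
print(parts.netloc)    # the authority is kept verbatim
print(parts.path)      # the dot segment is kept verbatim
print(parts.geturl())  # reassembling gives back an un-normalized URL
```

A WHATWG URL parser would serialize the same input as `http://example.com/b`: host lowercased, default port dropped, dot segments resolved.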

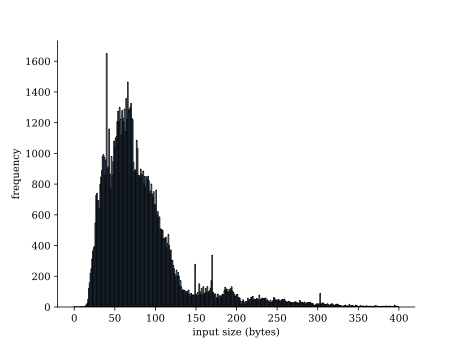

# How long are URLs?

https://github.com/ada-url/url-various-datasets/tree/main/top100

---

# How long does it take to parse a URL on average?

curl 7.81.0 (RFC 3986), written in C

- 18 000 instructions/URL
- 7 100 cycles/URL
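As a quick sanity check on the curl numbers, dividing the two figures gives the average number of instructions retired per cycle (a derived value, not a separate measurement):

$$
\frac{18\,000\ \text{instructions/URL}}{7\,100\ \text{cycles/URL}} \approx 2.5\ \text{instructions/cycle}
$$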

Compilers may do it for you, but not always.

---

# URL parsing no longer a bottleneck in Node 20

| node version | request/second (simple) | request/second (href) | gap |
|--------------|-----------|------------|----|
| 20.1 | 61k | 59k | 3% |
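The gap column is consistent with the two throughput figures, assuming it is computed relative to the simple benchmark:

$$
\text{gap} = \frac{61\text{k} - 59\text{k}}{61\text{k}} \approx 0.033 \approx 3\%
$$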